- OneAgent Installation on OpenShift directly on Linux versus Operator

16 Jun 2020 04:22 PM

Hi,

Can someone help me understand which approach is best for using Dynatrace on a small OpenShift cluster?

From what I can see in the documentation, using the OneAgent Operator instead of installing directly on Linux only brings limitations, but I'm not so sure.

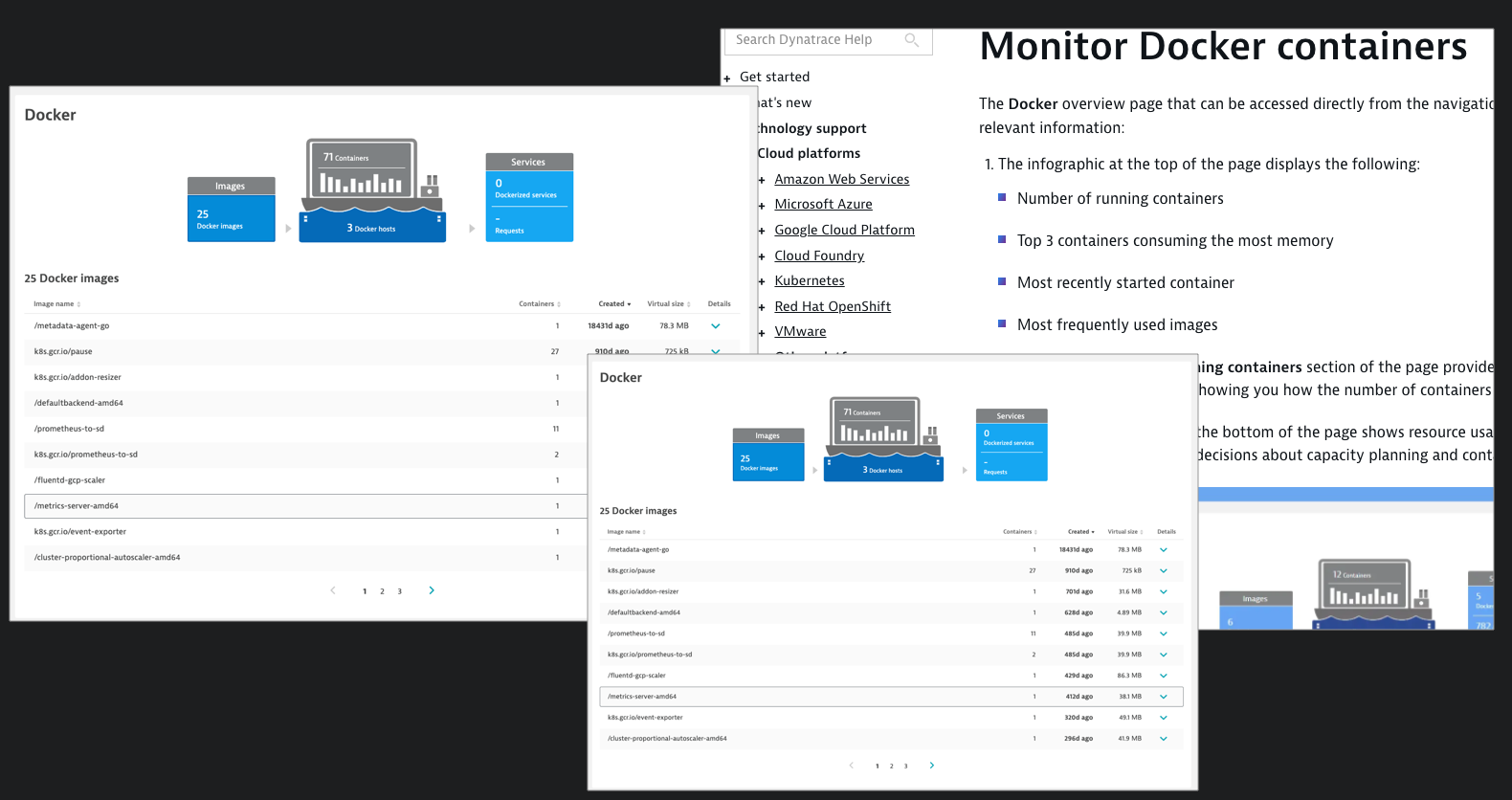

First of all, the OneAgent doesn't update automatically. (https://www.dynatrace.com/support/help/technology-support/cloud-platforms/other-platforms/docker/ins... )

Besides that, it can't capture some things that live outside of containers: JMX metrics and other processes running on the server are not covered, and there are no auto-starts and no crash or core dump handling.

16 Jun 2020 04:51 PM

For the first point, it works differently, but the agent does (or can) update automatically. Instead of updating the agent in place, the Operator deploys a new pod with the updated agent, or you can pin a specific version that should be used. Either way, using the Operator does not mean you need to update agents manually.

See the section here with the example parameters at step 6. Auto updates are on by default but can be disabled: https://www.dynatrace.com/support/help/shortlink/openshift-manage-helm#installation
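To make the update behavior concrete, here is a toy model in Python. It is only a sketch of the logic described above, not the Operator's actual code: the names `AgentPod` and `pods_to_replace` and the version strings are hypothetical, and the rule that a pinned version takes precedence over auto-update is an assumption made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AgentPod:
    """A OneAgent pod running on one node (hypothetical model)."""
    node: str
    version: str


def pods_to_replace(
    pods: List[AgentPod],
    latest_version: str,
    auto_update: bool = True,
    pinned_version: Optional[str] = None,
) -> List[AgentPod]:
    """Return the agent pods that would be redeployed with a new version.

    Assumption: a pinned version wins over auto-update. With no pin and
    auto-update disabled, nothing is replaced.
    """
    if pinned_version is not None:
        target = pinned_version
    elif auto_update:
        target = latest_version
    else:
        return []
    # Pods are replaced rather than updated in place.
    return [pod for pod in pods if pod.version != target]


pods = [AgentPod("node-a", "1.193"), AgentPod("node-b", "1.195")]
print([pod.node for pod in pods_to_replace(pods, latest_version="1.195")])
```

With auto-update left at its default, only the pod on `node-a` is out of date and would be replaced. Passing `auto_update=False` returns an empty list.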

For point 2, that is correct. With the Operator, the agent gets deployed as a privileged container/pod, so all the limitations of monitoring from a container still apply.

The big motivation for the Operator is 'day 2 operations', for example if you have large clusters or many of them. It can also do things like configure Istio to work with Dynatrace, and more can be added down the line.

In your case, with a small cluster, if you think you'd want any of the functionality that shows up as a limitation of the container-based/Operator approach, I would just install a full OneAgent on those nodes. If we later add functionality to the Operator that you would like to use, swapping over should be painless on a small cluster.

Lastly, not for you but for anyone else who comes across this, I just want to clarify for them that we're referring to deploying the OneAgent itself as a container, not the monitoring of containers.

18 Jun 2020 07:15 AM

Your options are to either (a) install the full-stack agent on each host per [1] or (b) install it using the Operator per [2].

If you install using [1], you will have to install the agent on each node, one by one. You will also have to recycle your pods when you want to apply an update.

If you install using [2], you will automatically get a K8s DaemonSet that runs the agent on each node, and you will not have to recycle your pods when an update is available.
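As a rough illustration of why the DaemonSet route saves the node-by-node work, the sketch below models the "one agent pod per node" rule in Python. It is a simplified picture, not Kubernetes code, and the function name and node names are made up for the example.

```python
from typing import Iterable, List


def nodes_missing_agent(nodes: Iterable[str], agent_pod_nodes: Iterable[str]) -> List[str]:
    """Return the nodes that do not yet run an agent pod.

    A DaemonSet controller keeps this list empty by scheduling a pod
    on every such node. With a per-host install you do that by hand.
    """
    covered = set(agent_pod_nodes)
    return [node for node in nodes if node not in covered]


cluster_nodes = ["node-a", "node-b", "node-c"]  # node-c has just joined
print(nodes_missing_agent(cluster_nodes, ["node-a", "node-b"]))
```

Here the newly joined `node-c` is the only node without an agent. With option [2] it gets covered automatically, while with option [1] it needs its own installation.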

The page you reference [3] is a bit confusing. It's really for folks who have installed OneAgent directly into a Docker container because they don't have a container platform like OCP or K8s. We should make this much clearer. I would ignore that page, as it doesn't apply to you.

18 Jun 2020 07:28 AM

Additionally, I think these help pages remain for legacy reasons. They might give a Docker Swarm customer some insights, but as Docker EE has shifted towards Kubernetes, the number of folks using these old Docker pages will continue to decline. The Kubernetes integration connects to the cluster API and gets all sorts of valuable cloud-native data that the Docker approach will not offer. We'll phase this pure "docker" page out eventually, especially since we now get generic cgroup metrics from containerd and CRI-O the same way as we used to from Docker.