Reduce/prevent Google Search crawling RUM beacon URL's

25 Jan 2024 07:04 AM

Hi community,

Our SEO specialists are seeing that a large share of our Google crawl budget is being spent on Dynatrace RUM beacon URLs (/rb_XXXXX). This is a pity, as the budget should of course go to customer pages (and for some reason customers aren't interested in RUM beacon info... 😋)

I discussed this with Dynatrace earlier, and they suggested either using robots.txt or moving the beacon URL to a different domain (Google allocates crawl budget per domain). Apparently there is currently no setting to add a NOINDEX option.

robots.txt isn't optimal, as we sometimes still see Google crawling the URLs. I'd prefer to stay as close as possible to the out-of-the-box Dynatrace configuration, so changing the beacon URL isn't something I'd like to do either (I'm using OneAgent). Besides, that wouldn't solve the crawling issue; it would only push it to a different domain.
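For reference, here is a rough sketch of the robots.txt approach and a quick way to sanity-check it. It assumes all beacon paths start with `/rb_` (as in the `/rb_XXXXX` pattern above); the robots.txt content, the `is_crawlable` helper, and the `/products/shoes` path are only illustrative, not our real configuration. It uses Python's standard `urllib.robotparser`, which only tells you what a compliant crawler should do, not what Google actually does.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. "Disallow: /rb_" is a prefix rule, so it
# covers every path that starts with /rb_ (assumed beacon path pattern).
ROBOTS_TXT = """\
User-agent: *
Disallow: /rb_
"""


def is_crawlable(path: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the rules above allow user_agent to fetch path."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, path)


if __name__ == "__main__":
    print(is_crawlable("/rb_XXXXX"))        # False: beacon path is disallowed
    print(is_crawlable("/products/shoes"))  # True: normal pages stay crawlable
```

A check like this only confirms that the rule matches the beacon paths; it does not explain why we still see some crawling of them.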

Any suggestions on how we can cleanly prevent Google from crawling these useless URLs?

Kind regards,

Daan

27 Mar 2024 06:09 PM

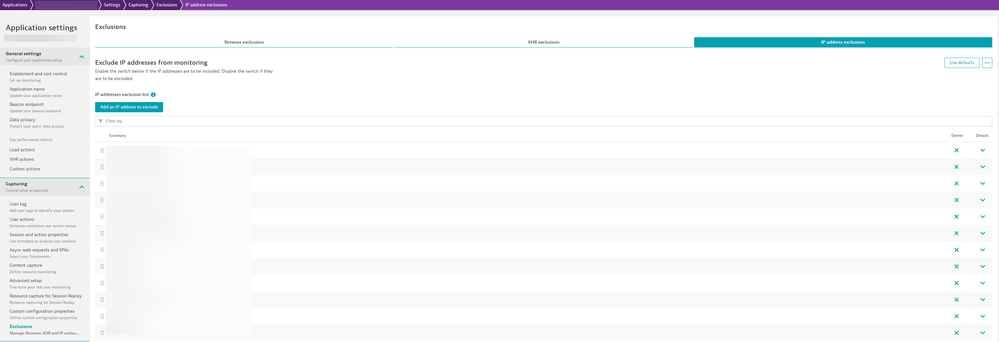

There isn't a good one-size-fits-all solution. What you can do is block the recording of the crawlers' IPs. Yes, it might be a never-ending battle adding new IPs and ranges, and it's configured on an app-by-app basis, so the API would come in handy to post the rules to all apps.

Just food for thought: